>_

DATAGATE/World’s First NASA AI Astronomical Research Data Sculpture Permanent Public Art

~Nanjing_China_2018

>_

STATEMENT >

KEPLER: 9.6 Years in Space _ 2,662 Planets Confirmed _ 61 Supernovae Documented _ 530,506 Stars Observed

The installation consists of three parts: Form, Light and Space. Light is the world’s first artwork based on the use of Machine Learning for space discovery and astronomical research, drawing on NASA’s Kepler data sets.

Using NASA’s Kepler data, the public will be able to observe exoplanets [planets that orbit other stars] on which human life could exist. Taking this concept one step further, Ouchhh intends the artwork to be seen as a gate between our planet and other habitable planets across the universe.

>_

STATEMENT .

Ouchhh will visualize and stylize the findings of neural networks that identify exoplanets from the dimming of a star’s flux. The resulting work will invite visitors to plunge into the fascinating world of space discovery through an immersive data sculpture. The installation will offer a poetic sensory experience and is meant to become a monument to mankind’s contemplative curiosity and profound need for exploration.

360° LED Sculpture _ Weight: 15 tons

>_

AWARDS

2019_Muse Creative Awards USA _DataGate_Experimental_Immersive Category

2020_AVIXA Awards USA _Best In-Person Experience and Best Dynamic Art Experience Award

2020_ASIA DESIGN PRIZE SEOUL _Gold Prize

Can you fit the whole universe into just one simple cube?

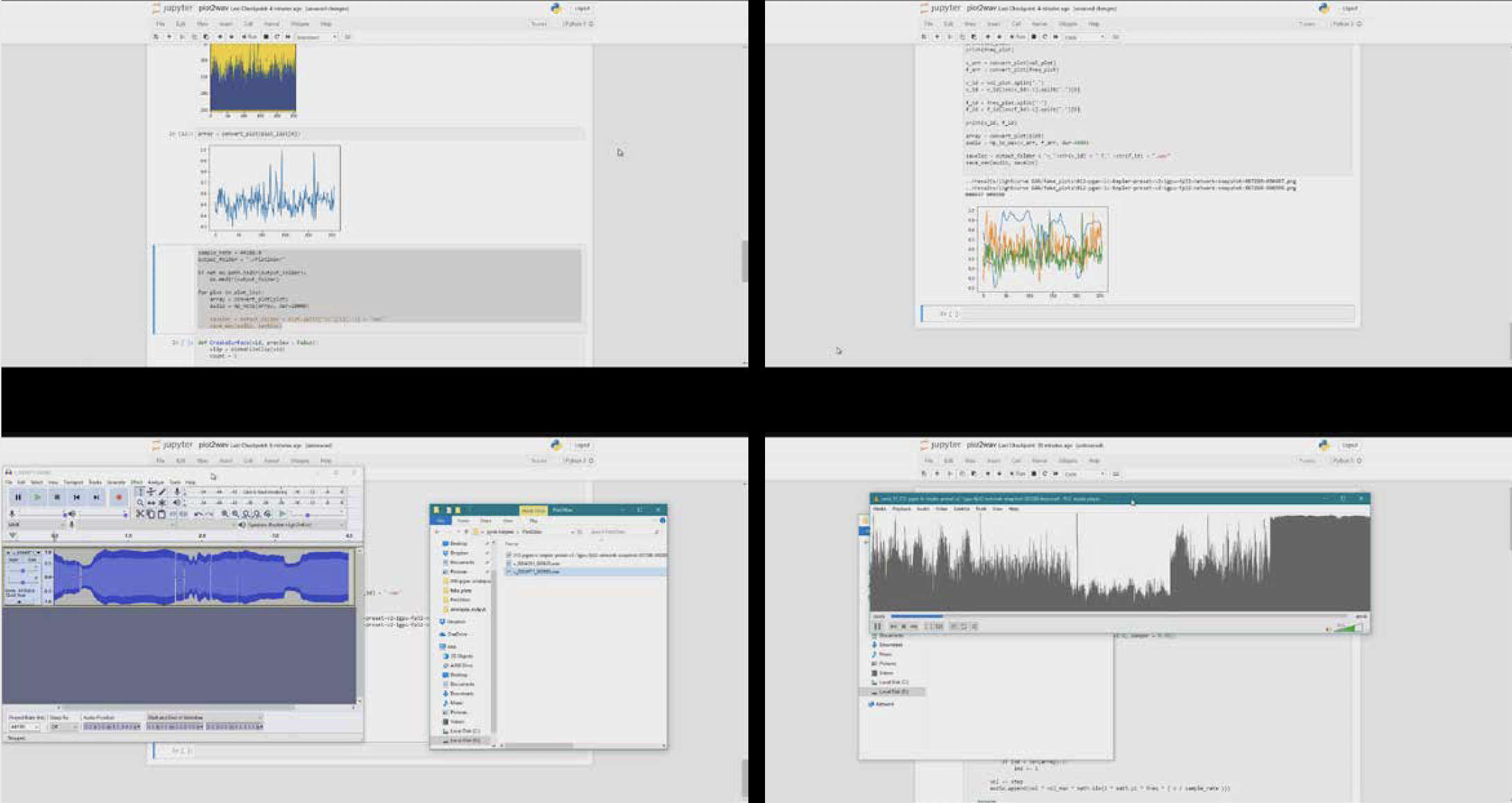

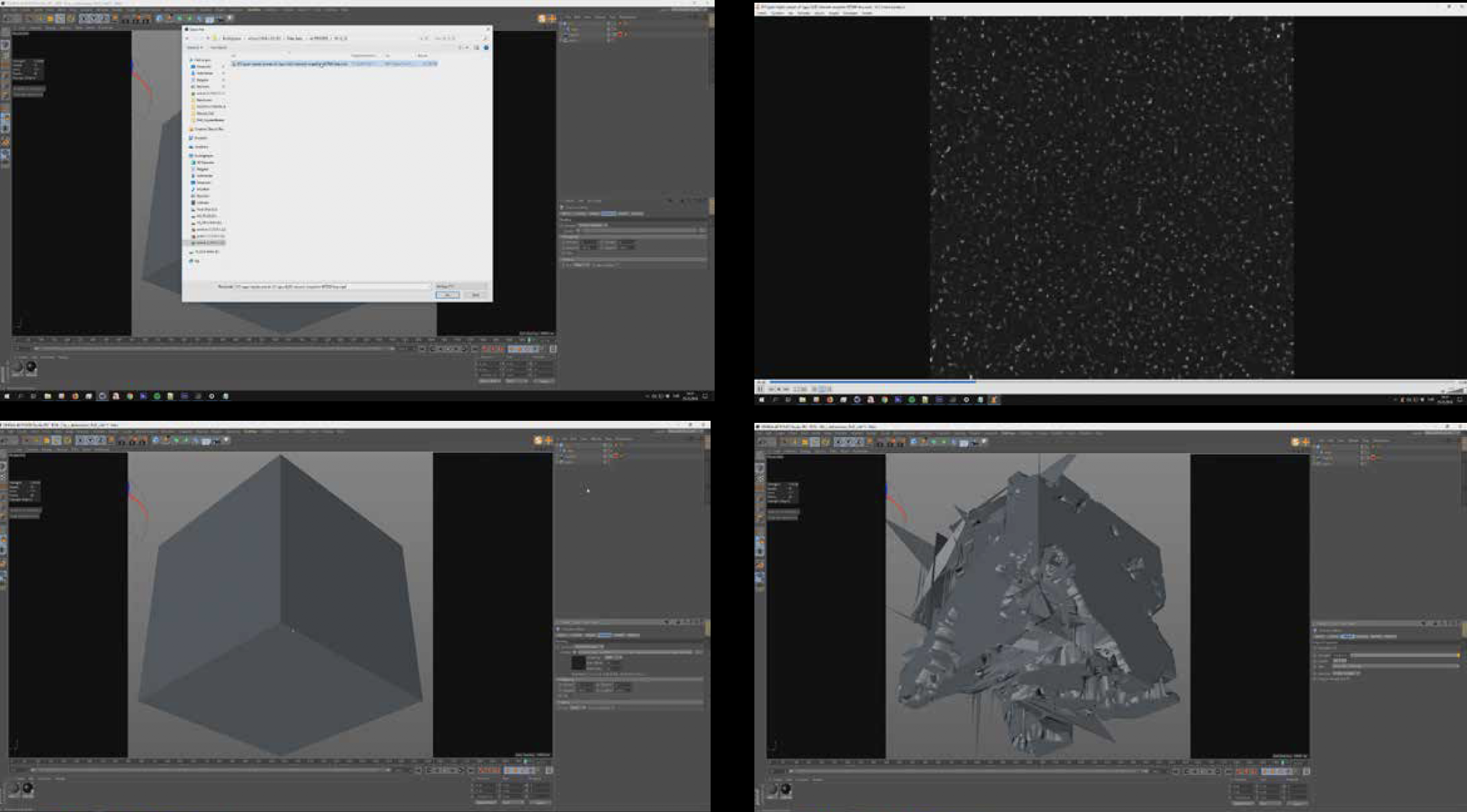

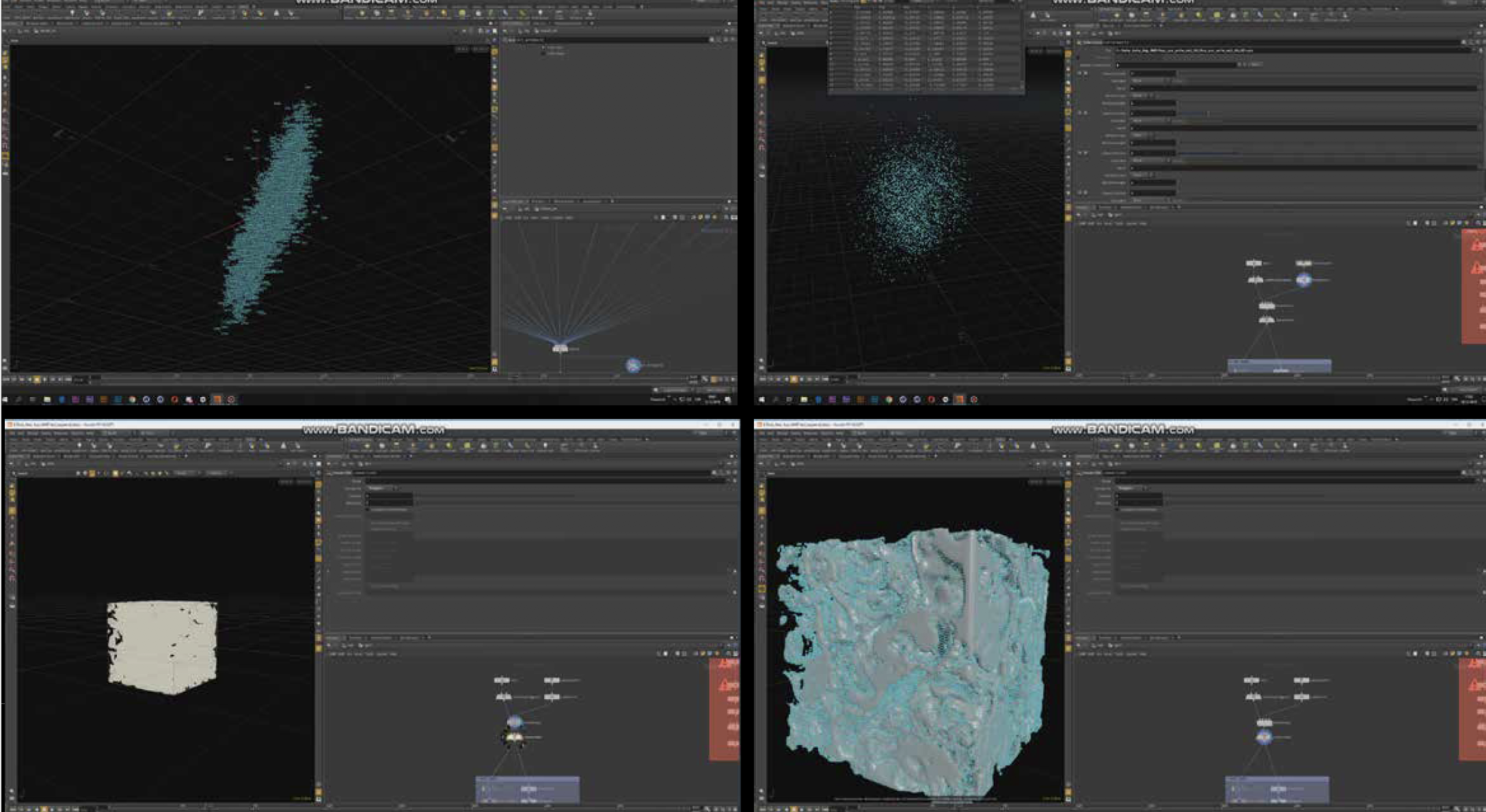

~Process/Scientific Data Journey of DATAGATE

>_

AI DATA FLOW

>_

What is the background of this work?

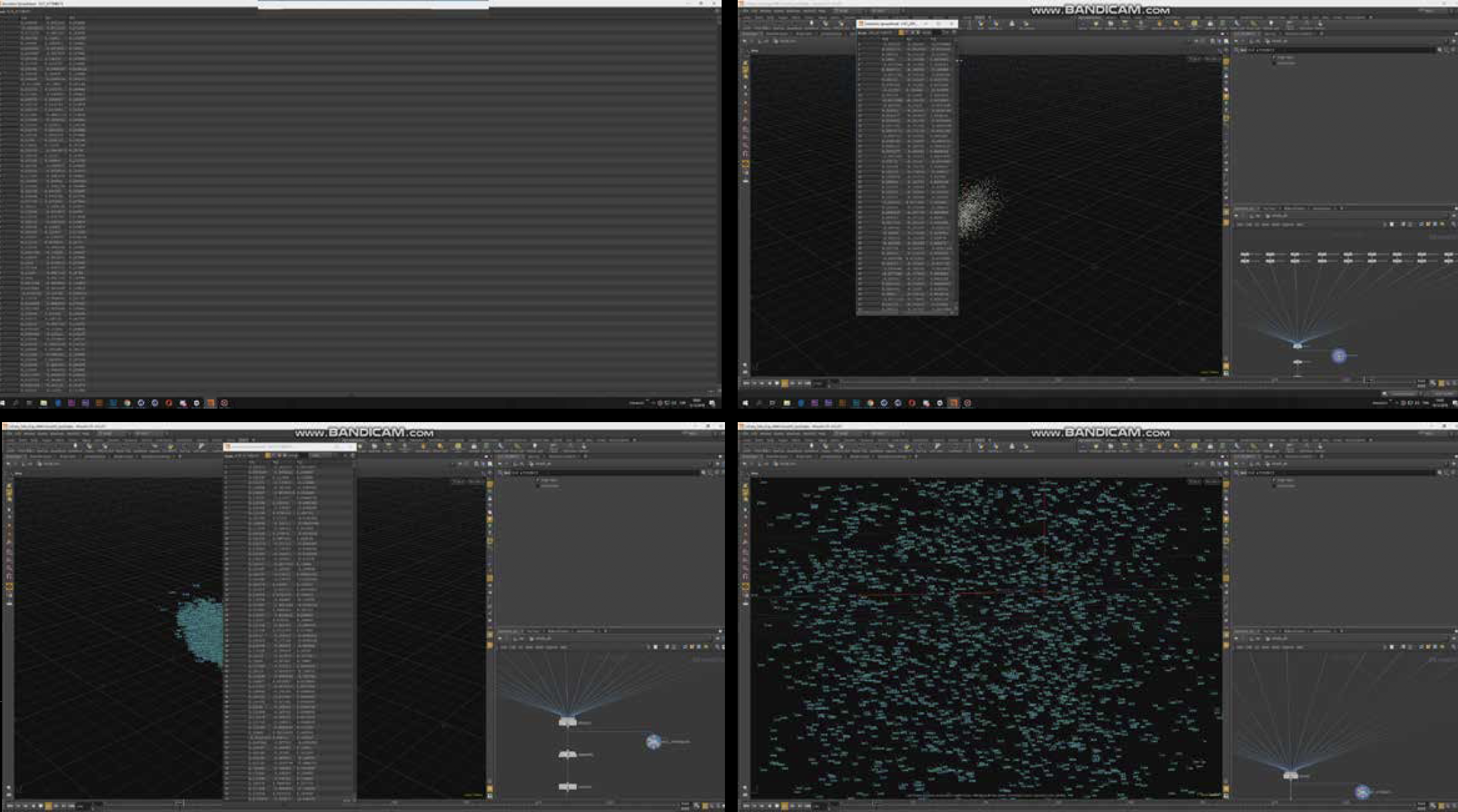

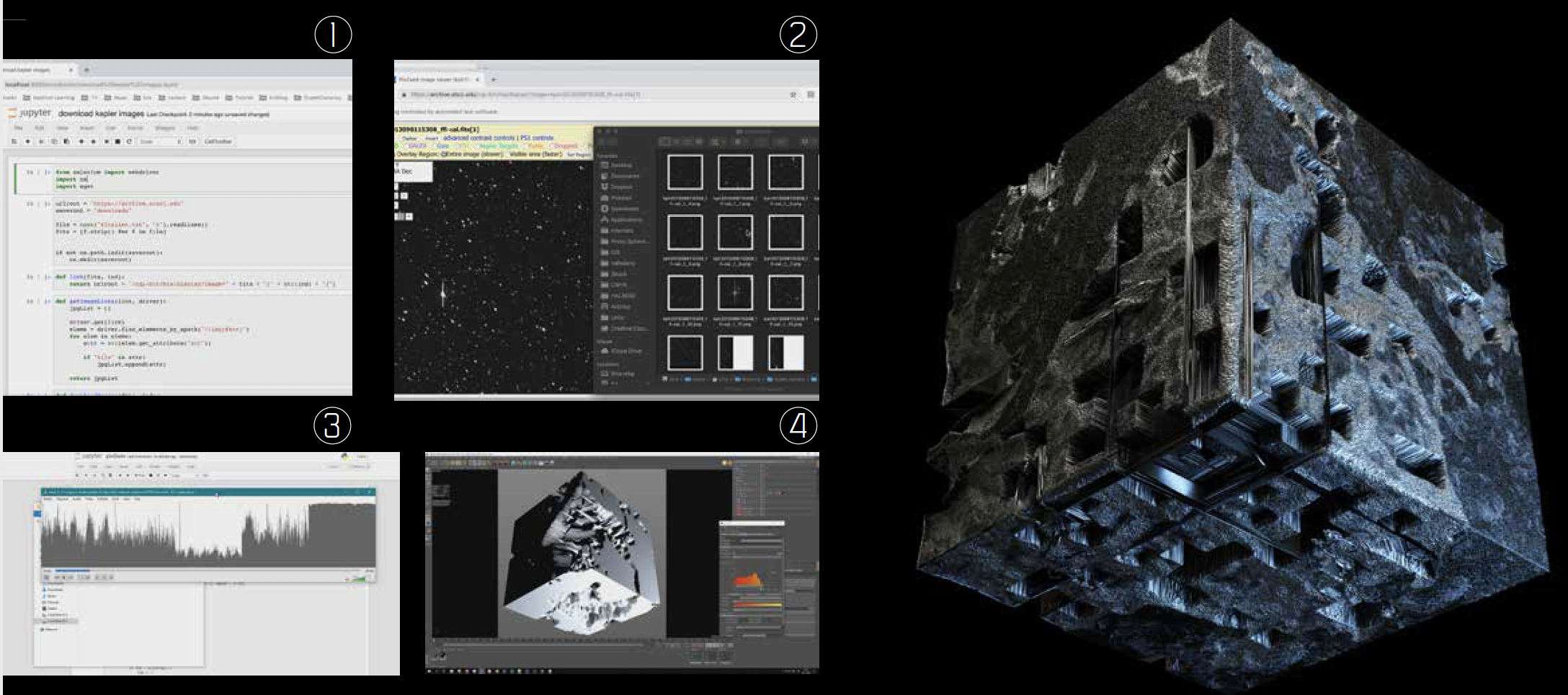

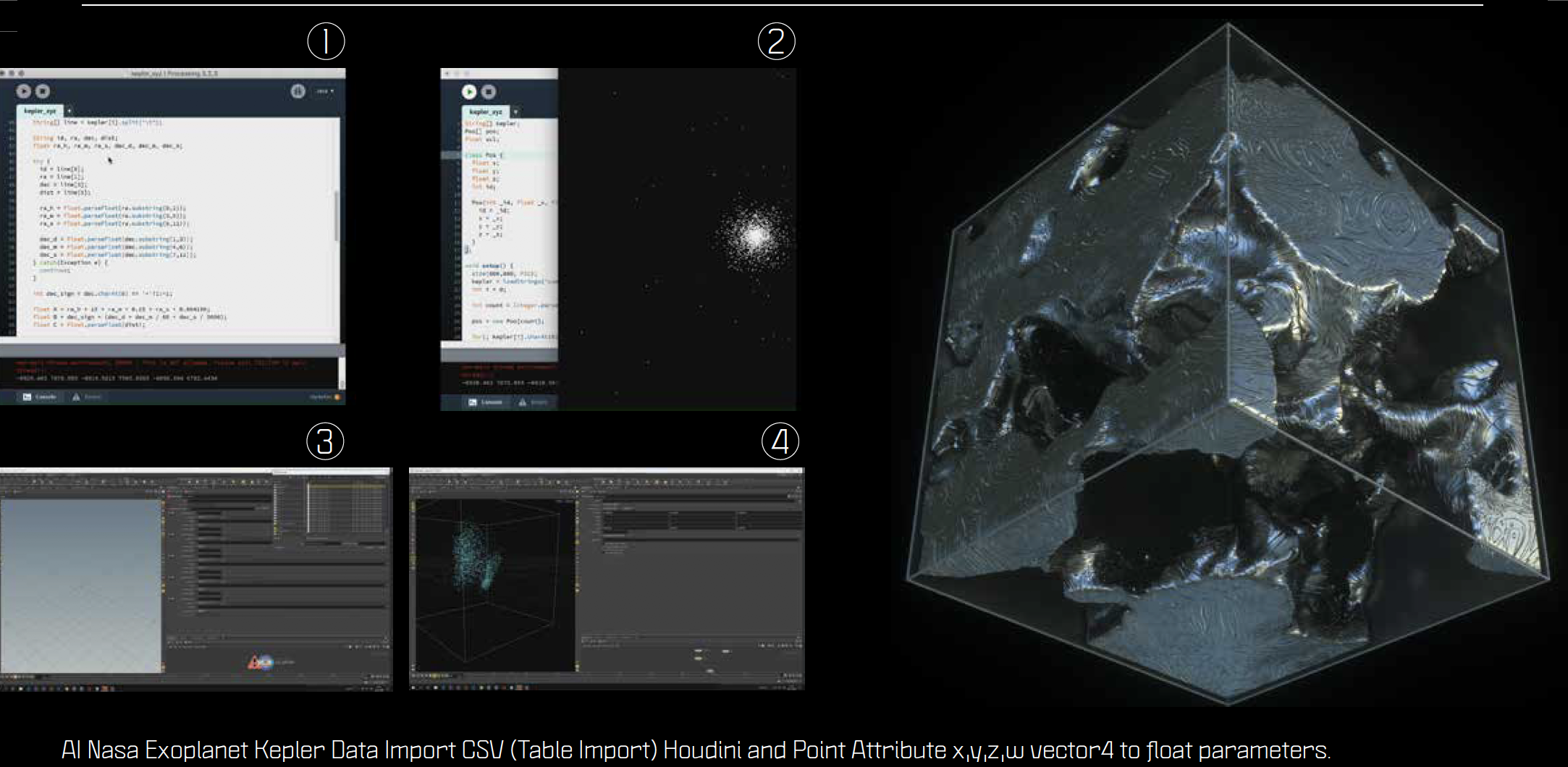

Data Gate by Ouchhh New Media Studio proposes an aesthetic approach to AI-powered exploration of the Kepler data. The Kepler mission data is a very large set of spreadsheets containing information about the detected exoplanets. Each table in these spreadsheets contains around a hundred columns, and each column represents one aspect, or feature, of these planets. In addition to these spreadsheets, the publicly available data includes the raw light curve readings that were used to detect these planets. The planets cannot be read directly off this data, but various AI methods have been used to analyze the light curves and extract planetary data from them. Because of its complexity and size, this data cannot be visualized as is, so we propose an AI-based approach to interpret and aestheticize it. Our aim is to analyze this data through AI strictly to produce an alternative, visual representation of it. This project does not, and cannot, aim to detect new planets; that work has already been done excellently and made publicly available. One of the techniques we will use is called “dimensionality reduction”.
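To make the table structure concrete: each planet is one row, and each numeric column is one dimension of that planet’s feature vector. The sketch below is a minimal illustration, not Ouchhh’s pipeline. It assumes the table has been exported as CSV, and the helper names `load_feature_vectors` and `standardize` are hypothetical. Rescaling every column to zero mean and unit variance is an assumed, though common, preparation step before dimensionality reduction, so that columns measured in large units do not dominate the others.

```python
import csv
import io
import math


def load_feature_vectors(csv_text, columns):
    """Parse a CSV table into one numeric feature vector per row (one row = one planet).

    Rows with a missing or non-numeric value in any selected column are skipped.
    """
    vectors = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        try:
            vectors.append([float(row[name]) for name in columns])
        except (KeyError, TypeError, ValueError):
            continue  # incomplete row: leave it out rather than guess a value
    return vectors


def standardize(vectors):
    """Rescale every column (feature) to mean 0 and standard deviation 1."""
    if not vectors:
        return []
    n, d = len(vectors), len(vectors[0])
    means = [sum(v[j] for v in vectors) / n for j in range(d)]
    stds = []
    for j in range(d):
        variance = sum((v[j] - means[j]) ** 2 for v in vectors) / n
        stds.append(math.sqrt(variance) or 1.0)  # constant column: avoid dividing by 0
    return [[(v[j] - means[j]) / stds[j] for j in range(d)] for v in vectors]
```

With about a hundred columns selected, each resulting vector is one point in a roughly 100-dimensional space, which is what dimensionality reduction then compresses.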

For example, if an exoplanet is described by 90 different attributes, its information is “90-dimensional”. To reduce the number of dimensions, a neural network is trained on these columns to find the relations between them. This not only reveals hidden relations in the table, but also makes the 90D information visible in three dimensions. Another technique we proposed is the Recurrent Neural Network (RNN), which is best suited to sequential data such as stock values, musical notation or written text. For example, an RNN can be trained on all of Shakespeare’s works to generate Shakespearean text.
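The text does not specify the network architecture. A minimal sketch, assuming the simplest possible choice: a linear autoencoder that squeezes each standardized feature vector through a 3-unit bottleneck and learns to reconstruct the input from it. After training, the 3 bottleneck values of each planet are the coordinates of one point in 3D space. `train_autoencoder` and its parameters are hypothetical; a production version would more likely use a deep learning framework and nonlinear layers.

```python
import random


def train_autoencoder(data, k=3, epochs=200, lr=0.01, seed=0):
    """Train a linear autoencoder x -> h = W_enc x -> W_dec h with plain SGD.

    Minimizes 0.5 * ||W_dec W_enc x - x||^2 per sample.
    Returns (encode, loss_history), where encode(x) gives the k-dimensional code.
    """
    rng = random.Random(seed)
    d = len(data[0])
    w_enc = [[rng.gauss(0, 0.1) for _ in range(d)] for _ in range(k)]  # k x d
    w_dec = [[rng.gauss(0, 0.1) for _ in range(k)] for _ in range(d)]  # d x k

    def encode(x):
        # Project a d-dimensional vector down to k dimensions.
        return [sum(row[m] * x[m] for m in range(d)) for row in w_enc]

    history = []
    for _ in range(epochs):
        total = 0.0
        for x in data:
            h = encode(x)
            recon = [sum(w_dec[i][j] * h[j] for j in range(k)) for i in range(d)]
            r = [recon[i] - x[i] for i in range(d)]  # reconstruction error
            total += 0.5 * sum(e * e for e in r)
            # Backpropagate: gradient w.r.t. the code h, computed before w_dec changes.
            dh = [sum(w_dec[i][j] * r[i] for i in range(d)) for j in range(k)]
            for i in range(d):
                for j in range(k):
                    w_dec[i][j] -= lr * r[i] * h[j]
            for j in range(k):
                for m in range(d):
                    w_enc[j][m] -= lr * dh[j] * x[m]
        history.append(total / len(data))  # mean loss over the epoch
    return encode, history
```

A linear autoencoder trained with squared error ends up spanning the same subspace as principal component analysis; nonlinear activations are what would let a network capture curved relations between the columns.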

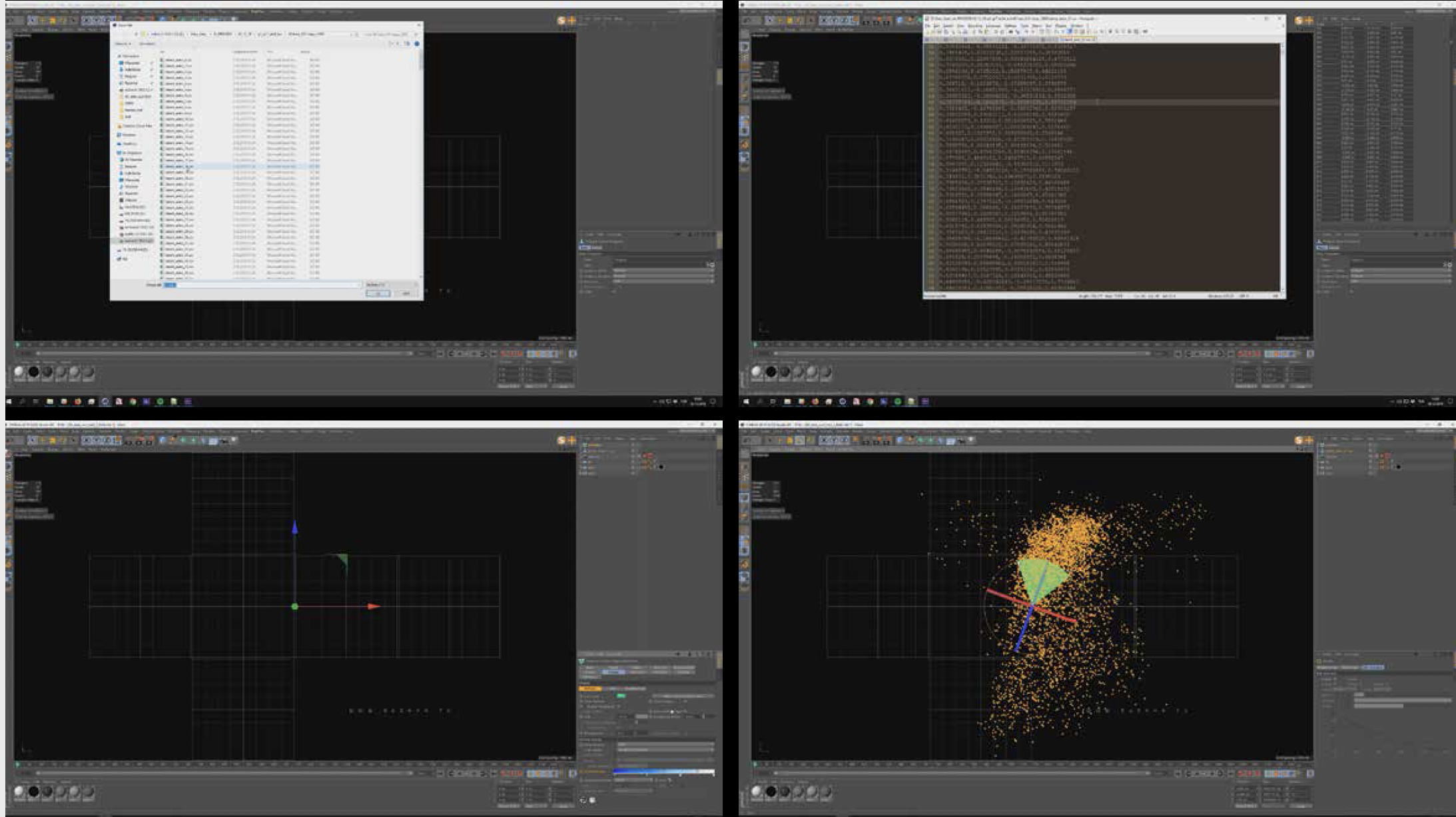

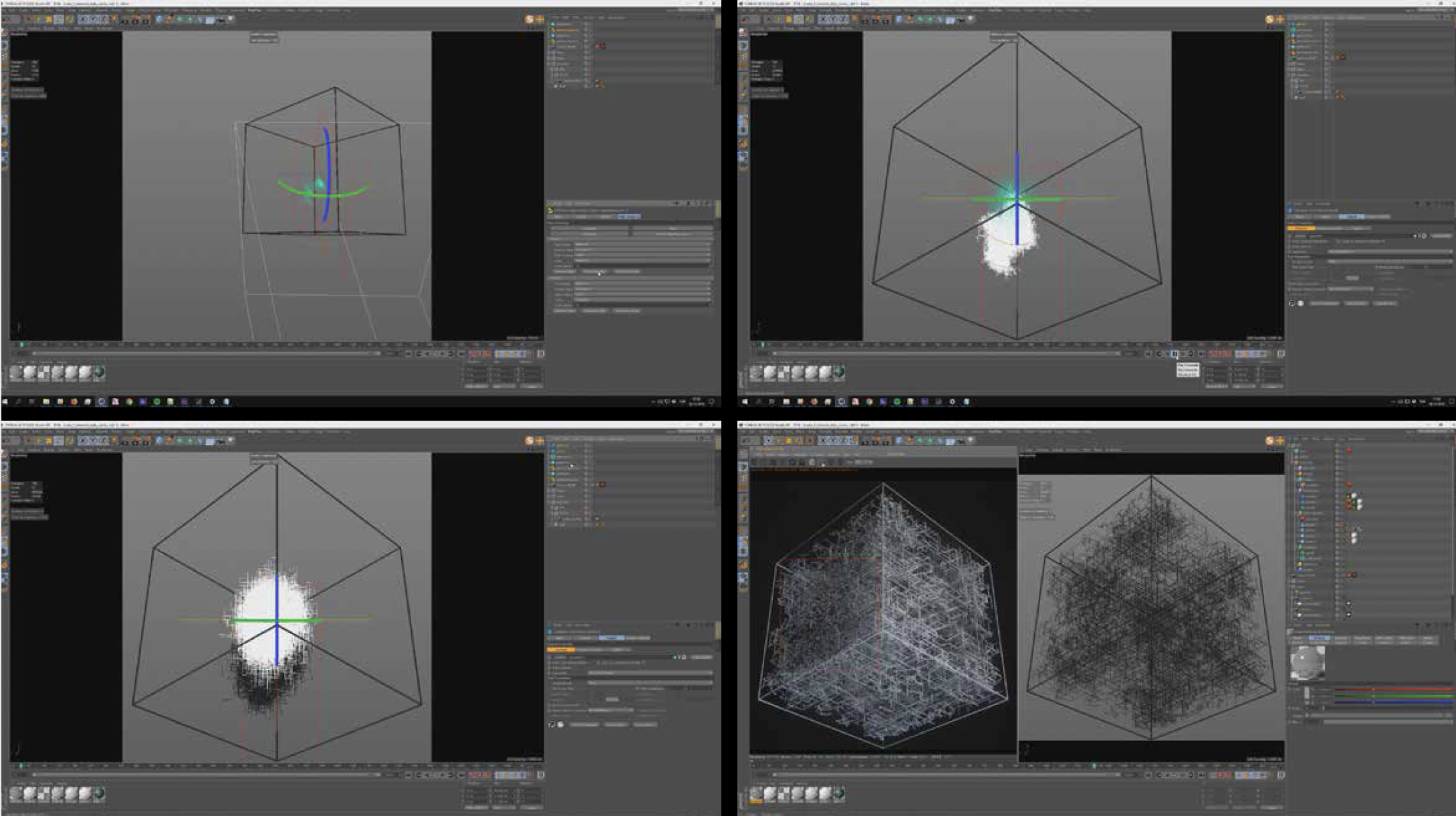

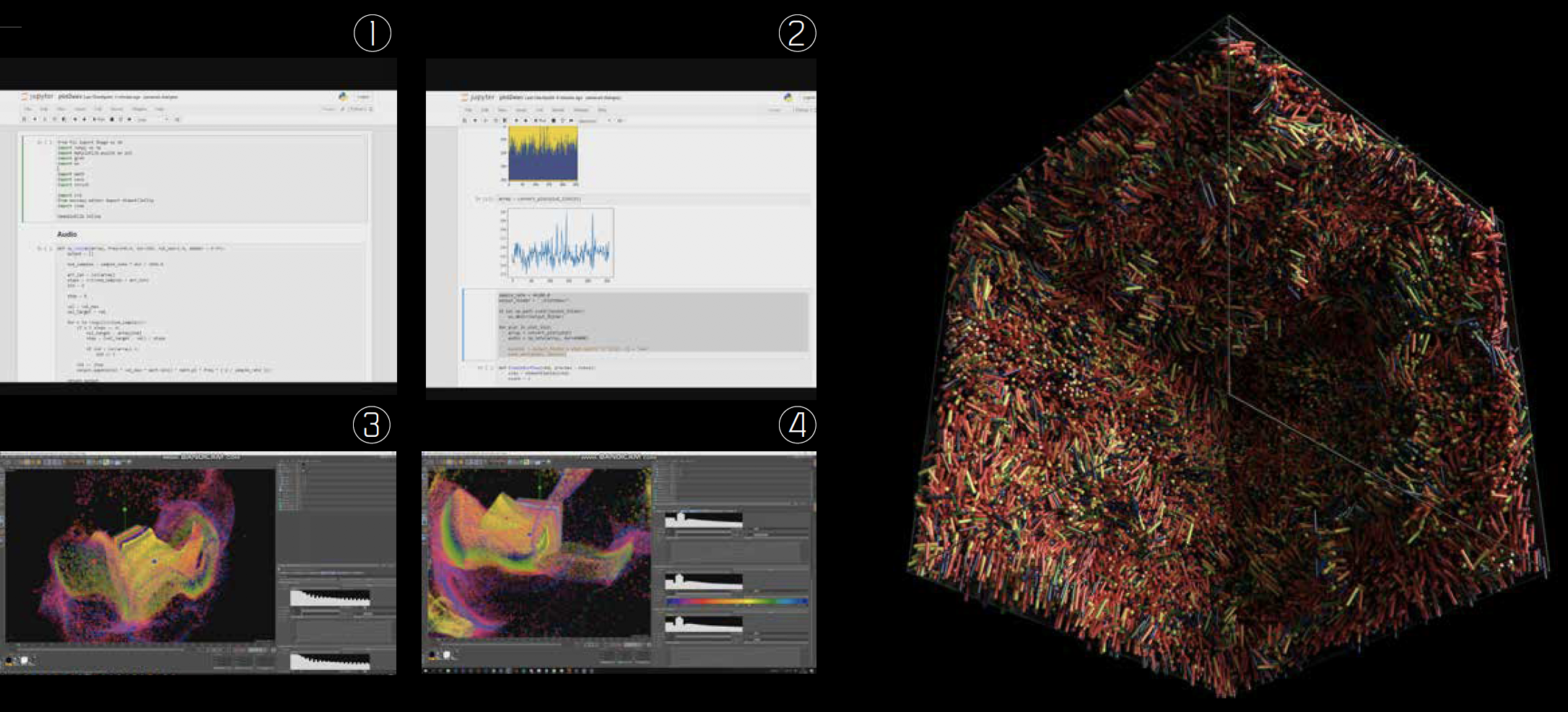

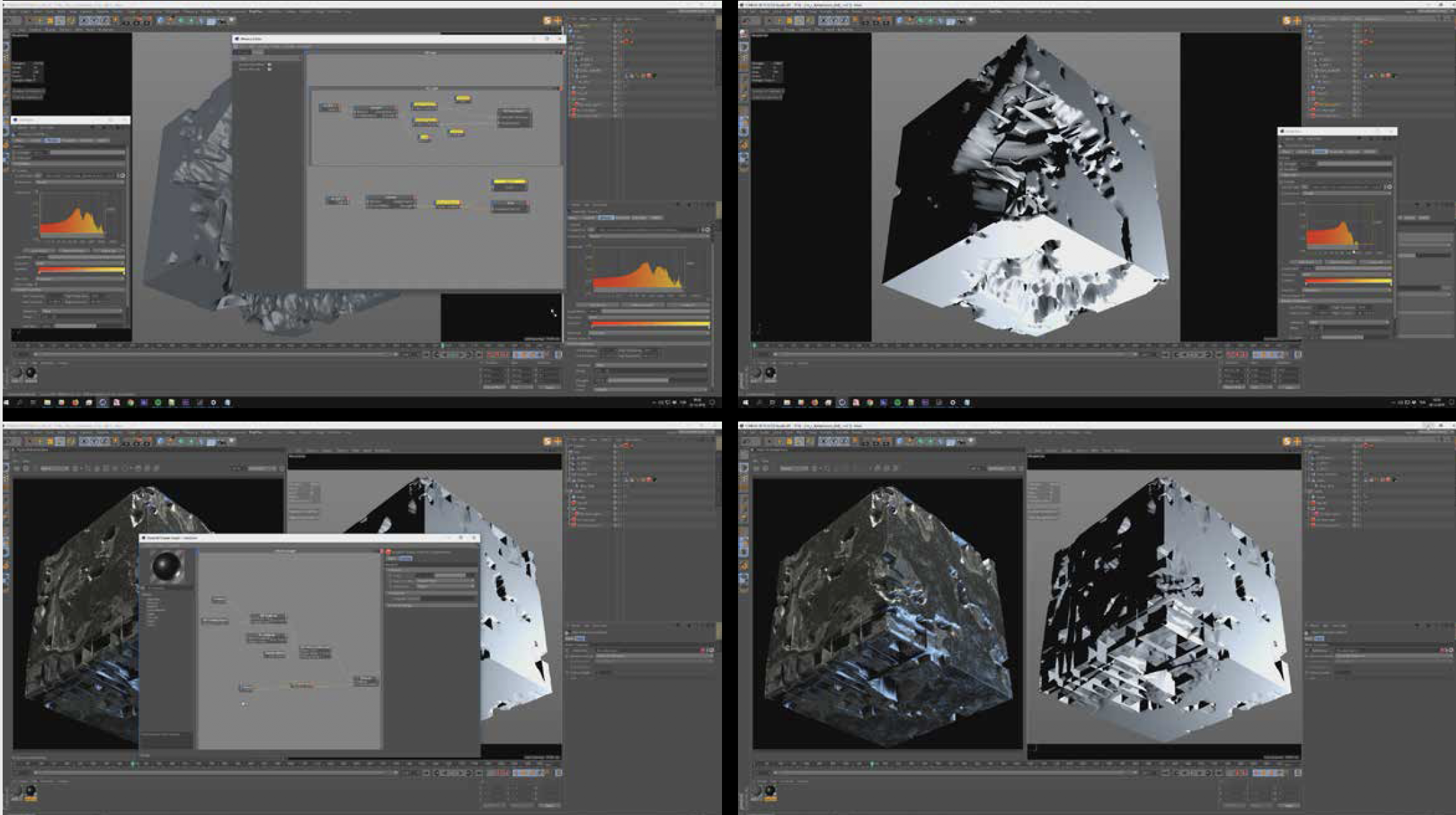

Just like music or any text, light curve data contains repeating, sequential and ultimately predictable values. To this end, we use an RNN to generate “hypothetical” stars with hypothetical light curve values: what-if scenarios of non-existent stars. Instead of extracting planetary data from this information, we generate our own light curves for visualization. The results of the RNN training inspired us to try out different, more experimental techniques, which are discussed in the following paragraphs. In both dimensionality reduction and RNN, the output becomes 2-, 3- or 4-dimensional data. We use this information in a number of ways, such as visualizing it directly as a point cloud, or more implicitly, as the main controllers of animations. Perlin noise is a good example: for volumetric cloud-based renderings, instead of using random motion, we use this multi-dimensional output as the main parameters of the noise, thus converting light curve data or hidden exoplanet relations into a Perlin noise sequence.
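The Perlin noise idea can be sketched as follows. This assumes 1D gradient noise and a hypothetical mapping in which a 3-value code, as produced by dimensionality reduction or an RNN, supplies the offset, frequency and amplitude of the noise. The mapping and the names `make_perlin_1d` and `embedding_driven_noise` are illustrative assumptions; the studio’s renderings used volumetric noise whose exact parameterization is not described.

```python
import math
import random


def make_perlin_1d(seed=0):
    """Return a deterministic 1D Perlin (gradient) noise function noise(x) in [-1, 1]."""
    rng = random.Random(seed)
    perm = list(range(256))
    rng.shuffle(perm)                                   # lattice-point hashing table
    grads = [rng.uniform(-1.0, 1.0) for _ in range(256)]  # one slope per lattice point

    def fade(t):
        # Perlin's quintic smoothstep: 6t^5 - 15t^4 + 10t^3
        return t * t * t * (t * (t * 6 - 15) + 10)

    def noise(x):
        i0 = math.floor(x)
        t = x - i0
        g0 = grads[perm[i0 & 255]]
        g1 = grads[perm[(i0 + 1) & 255]]
        # Each lattice gradient times the offset from its lattice point.
        n0 = g0 * t
        n1 = g1 * (t - 1.0)
        return n0 + fade(t) * (n1 - n0)  # smooth interpolation between the two

    return noise


def embedding_driven_noise(code, steps, noise):
    """Hypothetical mapping (assumption): code = (offset, frequency, amplitude)."""
    offset, freq, amp = code
    freq = 0.05 + abs(freq)  # keep the frequency positive and non-zero
    return [amp * noise(offset + freq * s) for s in range(steps)]
```

Because the noise is deterministic for a given seed, the same light curve or exoplanet code always produces the same motion, while different codes produce visibly different motion.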

Lastly, we propose to train a GAN-type network (Generative Adversarial Network) on the starscapes captured during the Kepler mission. By scraping the publicly available star images, we have accumulated a vast library of images featuring distant star maps. Since these images were taken away from Earth, the star positions, and by extension any formations resembling constellations, are inherently alien. By training a neural network on these images and generating our own, we propose to create imaginary night skies based on different locations in the universe.
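A GAN pits two networks against each other: a generator that turns random noise into samples, and a discriminator that tries to tell generated samples from real ones. Star images are far too large for a plain-Python sketch, so the following toy replaces each image with a single number. It is an assumption for illustration, not the studio’s model: a linear generator `g(z) = a*z + b` and a logistic discriminator `D(x) = sigmoid(w*x + c)` are updated in alternation with hand-derived gradients. Image GANs run the same loop with convolutional networks in place of these two-parameter models.

```python
import math
import random


def sigmoid(v):
    """Numerically stable logistic function."""
    if v >= 0:
        return 1.0 / (1.0 + math.exp(-v))
    e = math.exp(v)
    return e / (1.0 + e)


def train_toy_gan(real_samples, steps=2000, lr=0.01, seed=0):
    """Alternating adversarial training on scalar samples; returns generator params (a, b)."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator g(z) = a*z + b
    w, c = 0.1, 0.0  # discriminator D(x) = sigmoid(w*x + c)
    for _ in range(steps):
        x_real = rng.choice(real_samples)
        z = rng.gauss(0.0, 1.0)
        x_fake = a * z + b
        # Discriminator step: gradient ascent on log D(real) + log(1 - D(fake)).
        d_real = sigmoid(w * x_real + c)
        d_fake = sigmoid(w * x_fake + c)
        w += lr * ((1.0 - d_real) * x_real - d_fake * x_fake)
        c += lr * ((1.0 - d_real) - d_fake)
        # Generator step (non-saturating loss): gradient ascent on log D(fake).
        d_fake = sigmoid(w * x_fake + c)
        grad_x = (1.0 - d_fake) * w  # d/dx of log sigmoid(w*x + c)
        a += lr * grad_x * z
        b += lr * grad_x
    return a, b
```

A discriminator this simple can only compare positions along one axis, so it mostly pushes the generator toward where the real data lies; it cannot shape the full distribution the way a deep image discriminator can.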

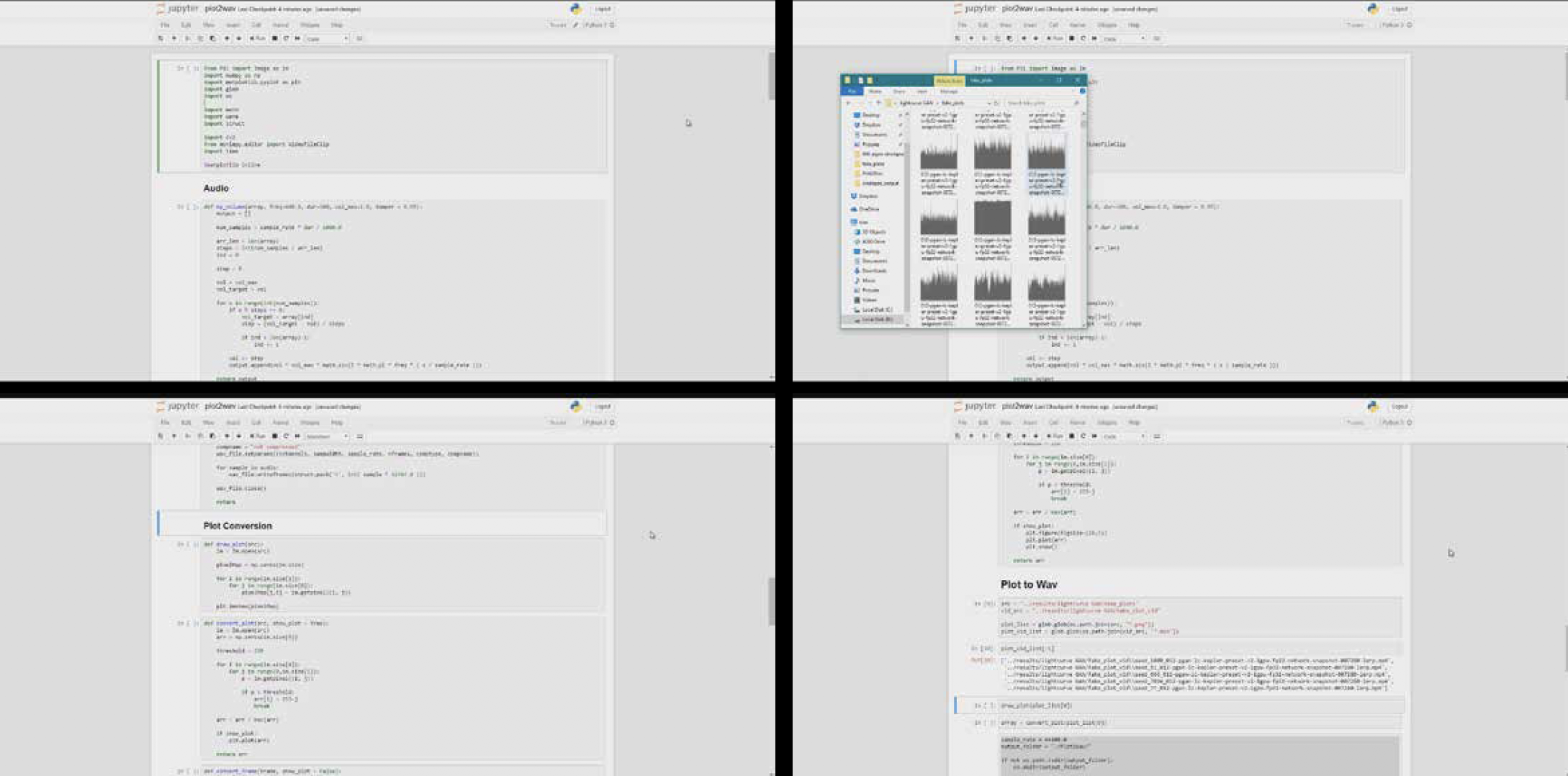

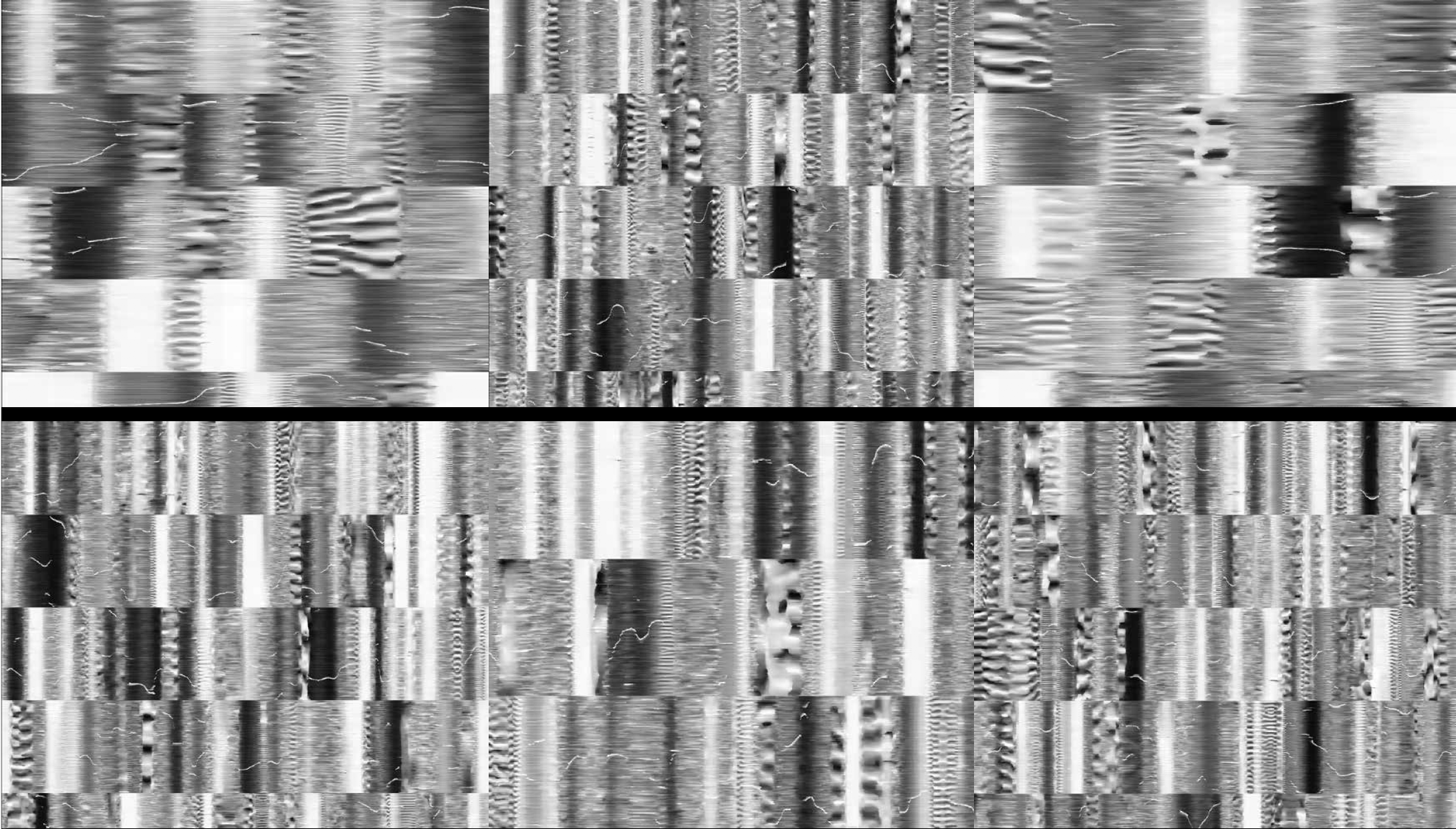

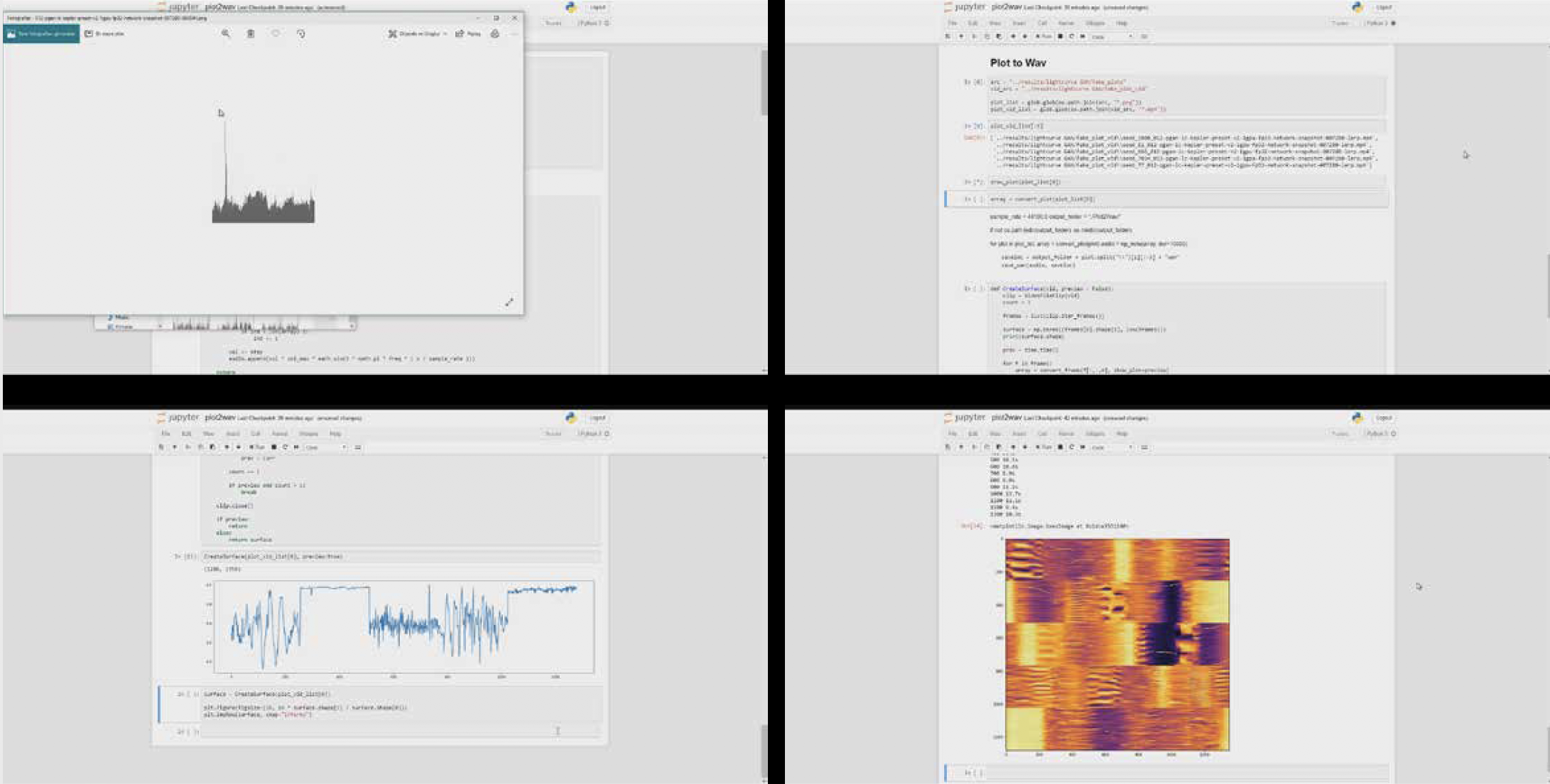

Our preliminary experiments showed that the RNN approach was not fruitful. The light curves of individual stars are highly repetitive, while their patterns differ too much from star to star to be treated as the single sequential dataset an RNN needs. Training on a single star’s light curve produced an exact replica of that curve, while cumulative training on many stars quickly converged to a static value without producing any curve at all. Instead, we used a more unconventional solution: treating the light curves as images, using the plots they produce to train a GAN for image generation. The results led us to further explore the possibilities of creative applications of AI.
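Treating a light curve as an image means drawing its plot into a pixel grid. The plotting style the studio used is not described, so this sketch assumes the simplest form: a binary image with one lit pixel per sample, scaled between each curve’s own minimum and maximum, with higher flux drawn nearer the top. `rasterize_curve` is a hypothetical helper; a stack of such images, one per star, would form the GAN training set.

```python
def rasterize_curve(values, height):
    """Draw a non-empty sequence as a height x len(values) binary image (row 0 = top).

    Each column gets exactly one lit pixel at the row matching its value,
    scaled between the curve's own minimum and maximum.
    """
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1.0  # flat curve: avoid division by zero
    image = [[0] * len(values) for _ in range(height)]
    for col, v in enumerate(values):
        # Higher flux -> smaller row index (nearer the top), as in a normal plot.
        row = round((hi - v) / span * (height - 1))
        image[row][col] = 1
    return image
```

For example, a transit dip in `[1.0, 1.0, 0.5, 1.0]` shows up as the third column’s pixel dropping to the bottom row of the image.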

The output of this training, i.e. continuously morphing light curve videos, is converted back into numerical sequences. This not only lets us use them in various forms such as music and animation, but also let us transform these videos into 2D “topologies”, where each column of the image is a rendering of a single frame of the video. The poetic connection we aimed for is generating hypothetical “terrestrial planet surfaces” from the light curves, which were used to find terrestrial planets in the first place. Finally, these surfaces are used once more to train a GAN to create morphing, living and ever-transforming terrestrial surfaces for imaginary planets.
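Going the other way, from a generated frame back to numbers, and then stacking the frames into a topology, can be sketched like this. It assumes each frame is a grayscale grid in which the curve is the brightest stroke in every column. `decode_curve` takes the brightness-weighted mean row of each column, and `frames_to_topology` places frame t in column t of a heightmap, matching the description that each column is a rendering of a single frame. Both names and the decoding rule are assumptions.

```python
def decode_curve(image):
    """Recover one value per image column: 1.0 at the top row, 0.0 at the bottom row.

    Uses the brightness-weighted mean row, which tolerates blurry GAN output.
    """
    height, width = len(image), len(image[0])
    denom = max(height - 1, 1)  # single-row image: avoid division by zero
    values = []
    for col in range(width):
        column = [image[row][col] for row in range(height)]
        total = sum(column)
        if total == 0:
            values.append(0.0)  # empty column: no curve drawn here
            continue
        mean_row = sum(r * p for r, p in enumerate(column)) / total
        values.append(1.0 - mean_row / denom)
    return values


def frames_to_topology(frames):
    """Stack decoded frames side by side: column t of the heightmap is frame t."""
    decoded = [decode_curve(f) for f in frames]
    length = len(decoded[0])
    return [[decoded[t][i] for t in range(len(decoded))] for i in range(length)]
```

Each value of the resulting heightmap can be read as an elevation, so the grid can be rendered as a terrain surface or collected as training data for the next GAN.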
